Cuda Implementation of Collaborative Filtering

Implementations

Serial Version

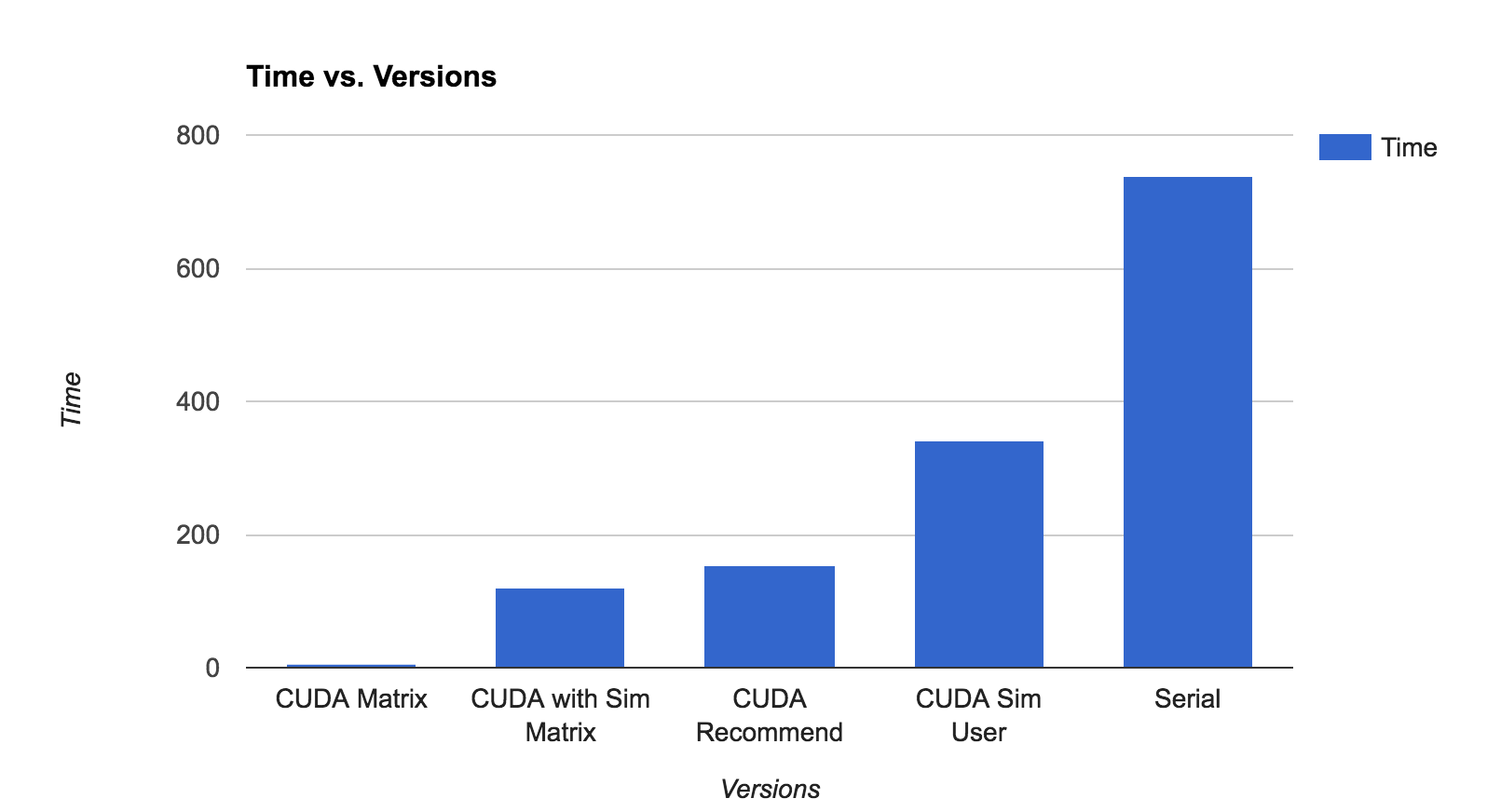

For each of the users, we first calculate the collrelation between the user and all other users and we get a list of correlations results. Then for each of the user’s unrated items, we calculate the score of that item by summing all the products of the users who rated the item with their ratings. Then we sort the resulting list and return the 5 items with the highest sums. After running it on the ml-1M dataset, it perfomed 6040 recommendations using 737.455 seconds.

Parallelizing the Correlation Calculation

We first try to improve the program by parallelizing the correlation calculation process. We name the matrix that contains all the ratings of the users as the rating matrix. The rating matrix has ITEM_COUNT columns and USER_COUNT rows. In order to populate the correlation scores for user A, we use user A’s row in the rating matrix and calculate its correlation using the transpose of the rating matrix. We can parallelize this process by dividing the rating matrix in to chunks and mapping them to threads on the GPU. During the process, we found out that threads can actually share a huge part of the data, so we decided to store them into shared memory. Just as shown in the figure, the blue part can be stored in the shared memory since it will be shared when computing the orange block. After running it on the ml-1M dataset, it perfomed 6040 recommendations using 341.807 seconds. We can definitely do better than a 50% speedup, so we decided to futher parallelize it.

Parallelizing the Recommendation Calculation

The next thing that we tried is to parallelize the recommendation process. We identified that the recommendation process is just calculating the matrix vector product of the list of similarity for user A and the rating matrix. Using similar methods, we stored parts of the vector in the shared memory to improve the arithmetic intensity. After running it on the ml-1M dataset, it perfomed 6040 recommendations using 152.90467 seconds. We were confused why it only acheived 4x speedup. We had a hypothesis that calling the same CUDA over and over again will cause the application bounded by the memory system since we are constantly coping the user rating matrix from the host to the device.

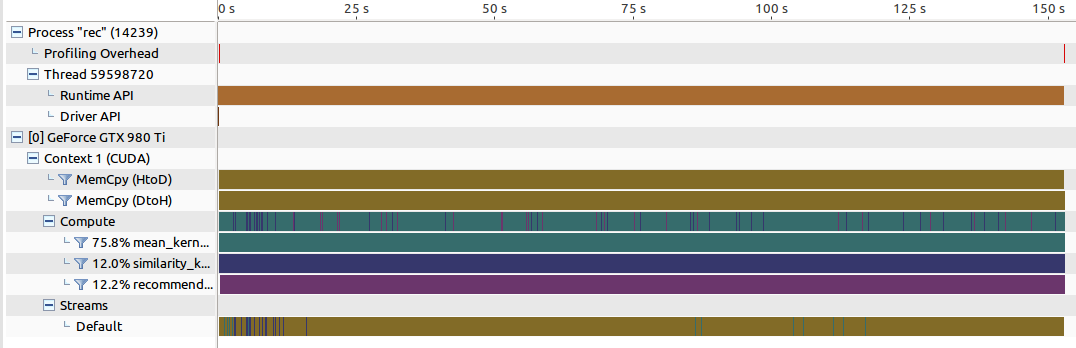

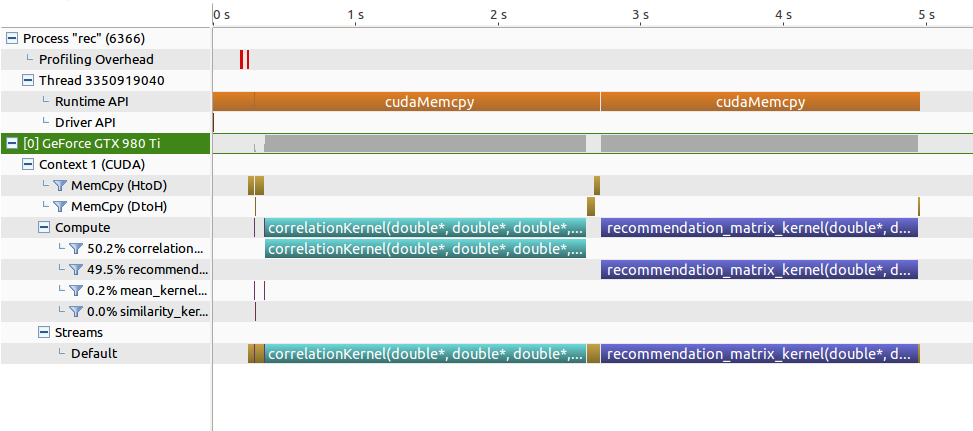

In order to verify our hypothesis, we decided to do a detailed benchmark of the program using the Nvidia Visual Profiler. From the timeline we can see that the program was constantly moving data between the device and the host.

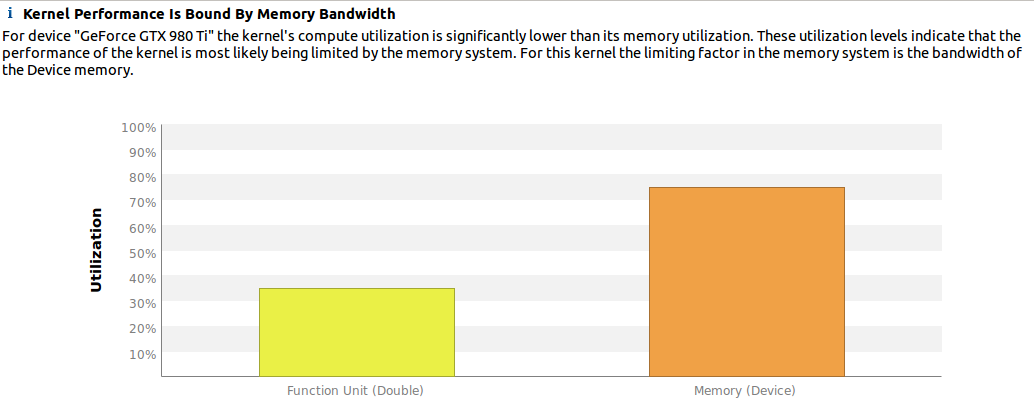

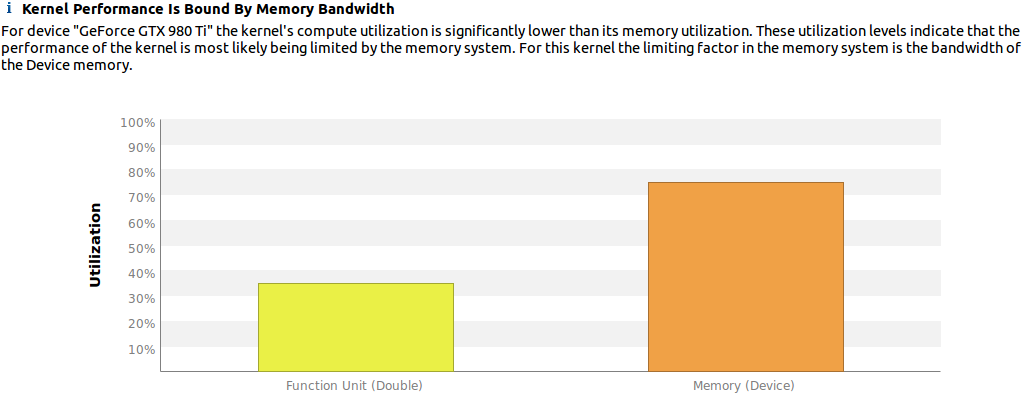

After performing a kernel analysis on the recommendation kernel, we found out that the memory utilization on the device is a lot higher than the computational utilization. Thus, this proves our hypothesis that the program is memory bounded. So in order to improve, we should find ways to share data between kernel calls.

Further Parallelizing the Correlation Calculation

Then we found out that when calcualting the correaltion of the single user, a lot of the data from the rating matrix can be reused. So instead of calculating the correlation for a single user, we can calculate the correlation between all the users in parallel. This way, although it will take longer to compute this kernel, but we can reuse the data across all the recommendation calls. We can populate the correlation of all users using the rating matrix and the rating matrix transposed. Here we stored another transposed version of the rating matrix because we want to maintain spacial locality when accessing the columns. Similar to the previous correlation calculation kernel, two blocks can be stored in shared memory so that we can share data across threads and increase the arithmetic intensity. After running it on the ml-1M dataset, it perfomed 6040 recommendations using 119.90333 seconds. It did not acheive the speedup that we had in mind. In order to find the problem with this implementation, we headed back to the Nvidia Visual Profiler.

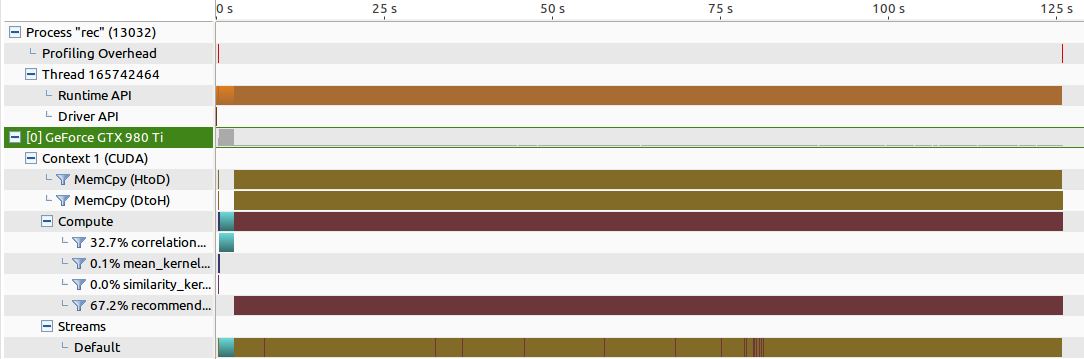

From the timeline we can see that the correlation kernel (cyan block) only took up 5% of the total time. Simlilar to what happed before, we can see that the program is still constantly moving data between the host and the device.

By performing a kernel analysis on the recommendation kernel we found out that although the memory utilization is a bit lower, it still takes up the majority of the timeline. This proves that the kernel is still memory bounded.

Further Parallelizing the Recommendation Calculation

After going over the code multiple times, we found the bottleneck of the whole program. In each recommendation kernel call, we copied over the whole rating matrix from the host to the device! We decided that we can share this data across all calls of the recommendation kernel and calculate the recommendations for all users in parallel and store it in memory. Then when each user requests a recommendation, we can just read if off from memory. After running it on the ml-1M dataset, it perfomed 6040 recommendations using only 5.59757 seconds. Thats a roughly 150X speedup compared to the serialized version.

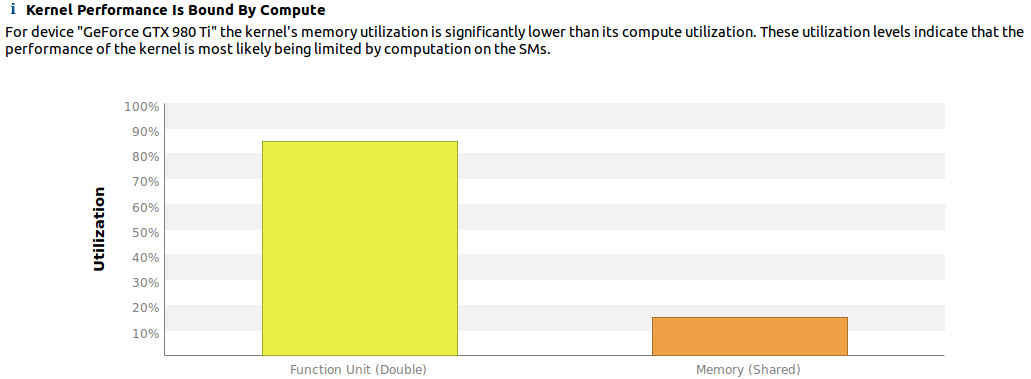

Heading back to the visual profiler we found out that for more than 90% of the time, the GPU is doing arithmetic computation. This shows that the program achieved a relatively high arithmetic intensity.

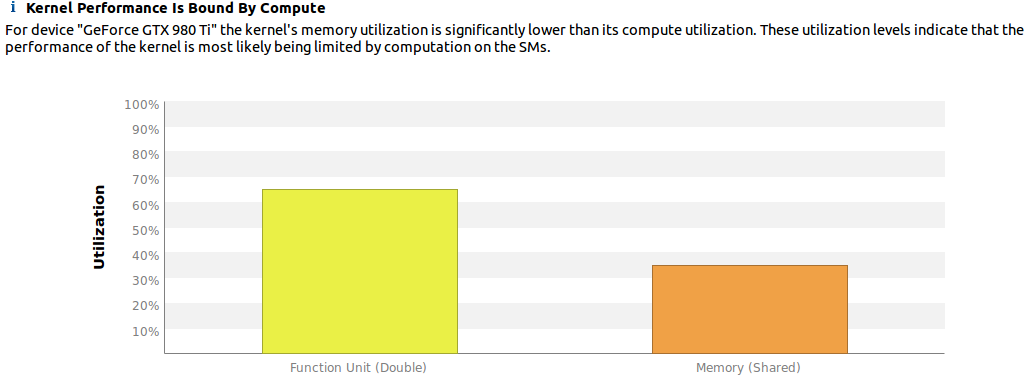

Looking at the two kernels individually we confirmed that both the kernels are bounded by computation. Since the only way to improve the computational power of the GPU is basically getting a better GPU, we know that we did a fine job parallelizing the program.

Results

By comparing the performance across different versions of the program we can see the effects of each small improvement.